27 Rights Groups Demand Zoom Abandon 'Invasive' and 'Inherently Biased' Emotion Recognition Soft...

More than two dozen rights groups are calling on Zoom to scrap its efforts to explore controversial emotion recognition technology. The pushback from 27 separate groups represents some of the most forceful resistance yet to the emerging tech, which critics fear remains inaccurate and undertested.

In an open letter addressed to Zoom CEO and co-founder Eric S. Yuan, the groups, led by Fight for the Future, criticized the company's alleged emotional data mining efforts as "a violation of privacy and human rights." The letter takes aim at the tech, which it described as "inherently biased" against non-white individuals.

The groups challenged Zoom to lean into its role as the industry leader in video conferencing and set standards that smaller companies might follow. "You can make it clear that this technology has no place in video communication," the letter reads.

"If Zoom advances with these plans, this feature will discriminate against people of certain ethnicities and people with disabilities, hardcoding stereotypes into millions of devices," said Caitlin Seeley George, director of campaigns and operations at Fight for the Future. "Beyond mining users for profit and allowing businesses to capitalize on them, this technology could take on far more sinister and punitive uses."

Though emotion recognition technology has simmered in tech incubators for years, it has recently drawn renewed interest from major consumer-facing tech companies like Zoom. Earlier this year, Zoom told Protocol it was actively researching how to incorporate emotion AI. In the near term, the company reportedly plans to roll out a feature called Zoom IQ for Sales, which will give meeting hosts a post-meeting sentiment analysis that attempts to gauge how engaged individual participants were.

Zoom did not immediately respond to Gizmodo’s request for comment.

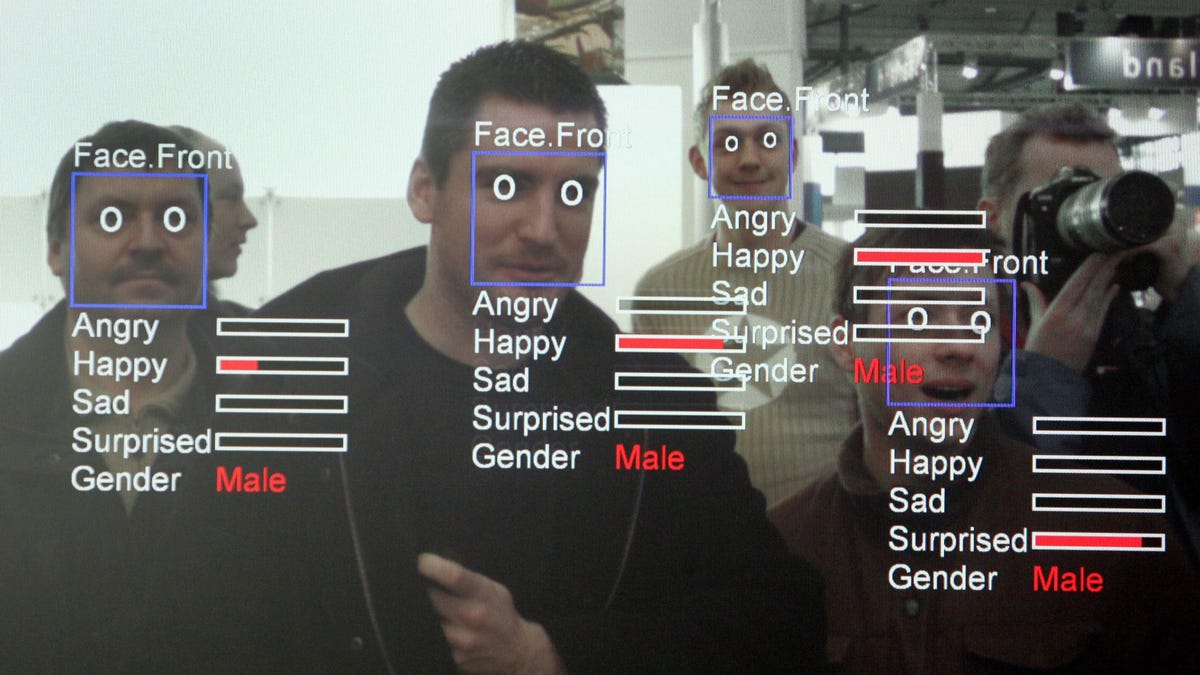

In her recent book Atlas of AI, USC Annenberg Research Professor Kate Crawford described emotion recognition, also referred to as "affect recognition," as an offshoot of facial recognition. While facial recognition, the better-known system, attempts to identify a particular person, affect or emotion recognition aims to "detect and classify emotions by analyzing any face." Crawford argues there is little evidence that current systems can meaningfully deliver on that premise.

“The difficulty in automating the connection between facial movements and basic emotional categories leads to the larger question of whether emotions can be adequately grouped into a small number of discrete categories at all,” Crawford writes. “There is the stubborn issue that facial expressions may indicate little about our honest interior states, as anyone who has smiled without feeling truly happy can confirm.”

Those concerns have not been enough to stop tech giants from experimenting with the technology; Intel has reportedly even tried using the tools in virtual classroom settings. There's plenty of potential money to be made in this space as well: recent global forecasts predict the emotion detection and recognition software industry could be worth $56 billion by 2024.

"Our emotional states and our innermost thoughts should be free from surveillance," Access Now Senior Policy Analyst Daniel Leufer said in a statement. "Emotion recognition software has been shown again and again to be unscientific, simplistic rubbish that discriminates against marginalized groups, but even if it did work, and could accurately identify our emotions, it's not something that has any place in our society, and certainly not in our work meetings, our online lessons, and other human interactions that companies like Zoom provide a platform for."

In their letter, the rights groups echoed concerns voiced by academics and argued that emotion recognition tech in its current state is "discriminatory" and "based off of pseudoscience." They also warned of potentially dangerous, unforeseen consequences of a rushed rollout.

“The use of this bad technology could be dangerous for students, workers, and other users if their employers, academic or other institutions decide to discipline them for ‘expressing the wrong emotions,’ based on the determinations of this AI technology,” the letter reads.

Still, the rights groups extended an olive branch, praising Zoom for its past work integrating end-to-end encryption into video calls and for its decision to remove attendee attention tracking.

“This is another opportunity to show you care about your users and your reputation,” the groups wrote. “Zoom is an industry leader, and millions of people are counting on you to steward our virtual future. As a leader, you also have the responsibility of setting the course for other companies in the space.”

Source: Gizmodo.com
