Assessing Developer Productivity When Using AI Coding Assistants

We have all seen the advertisements and glossy flyers for coding assistants like GitHub Copilot, which promise to use ‘AI’ to help you write code and complete programming tasks faster than ever. Yet how much of that has worked out since Copilot’s introduction in 2021? According to a recent report by code analysis firm Uplevel, there are no significant productivity benefits, while the use of GitHub Copilot also introduced 41% more bugs. Commentary from development teams suggests that while the coding assistant makes for faster writing of code, debugging or maintaining that code is often not realistic.

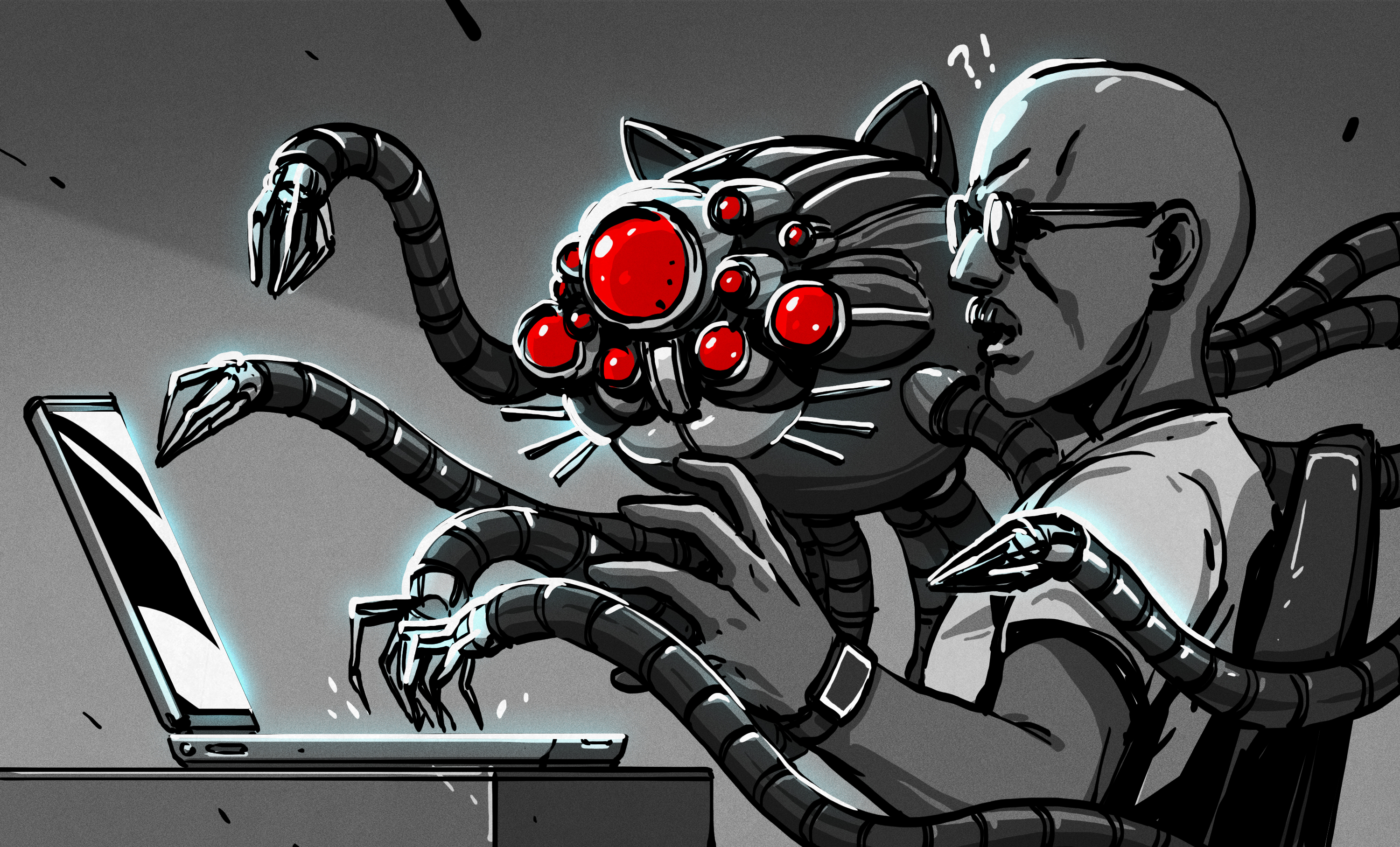

None of this should be a surprise, of course, as it mirrors what we already found when covering this topic back in 2021. Since GitHub Copilot and kin are effectively Large Language Models (LLMs) trained on codebases, they are best considered to be massive autocomplete systems targeting code. Much like with autocomplete on e.g. a smartphone, the experience is often jarring and full of errors. Perhaps the fairest assessment of GitHub Copilot is that it can be helpful when writing repetitive, braindead code that requires very little understanding to get right. Ask it for anything more, and it is bound to helpfully carry in a bundle of sticks and a dead rodent like an overly enthusiastic dog when all you wanted was for it to grab that spanner.

Until Copilot and kin develop actual intelligence, it would seem that software developer jobs are still perfectly safe from being taken over by our robotic overlords.

Source: Hackaday
